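China ranks first in the world in fruit output[1], yet the harvesting of common near-spherical fruits such as citrus, apples and peaches still relies mainly on manual labor. Human pickers can select fruit by ripeness, size and quality to reduce losses, and can adapt their techniques and tools to the characteristics of different fruit trees and to different picking requirements. However, as the population ages and the labor force shrinks, manual labor alone can no longer complete harvesting efficiently, so developing automated fruit-picking equipment has become an important way to address this problem.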

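Automated fruit-picking equipment that incorporates computer vision uses recognition algorithms to identify fruit in the orchard, assess its quality and locate it precisely[2]. Compared with traditional manual picking, such equipment offers fast recognition, high precision and low cost, and improves both the efficiency and the quality of harvesting[3]. Near-spherical fruits present a nearly circular contour in two-dimensional images, and this stable geometry helps algorithms reduce the false detections caused by complex shapes[4]. In addition, because near-spherical fruits have a regular shape and a consistent color distribution, data augmentation can be applied to them effectively, which increases the diversity of the training data, improves the generalization ability of the algorithm, and further improves the versatility and stability of the equipment so that it adapts better to different orchard environments. Nevertheless, automated picking equipment working in natural environments still faces many problems and requirements, such as illumination changes, occlusion, the diversity and complexity of targets, background interference and real-time constraints, all of which affect recognition accuracy. To overcome these challenges, the robustness and adaptability of object detection algorithms must be improved continuously; that is, under limited computing resources, the number of parameters must be reduced and the running speed increased so that the algorithms can be better applied to automated picking equipment[5].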

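In recent years, various algorithms and techniques have been proposed for object detection in the picking of near-spherical fruits. They fall into two broad categories: traditional object detection algorithms and deep-learning-based object detection algorithms. The latter are further divided into single-stage[6] and two-stage[7] object detection algorithms. This paper introduces the object detection algorithms applied to the picking of near-spherical fruits and summarizes and analyzes the research results for each category.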

1 Traditional object detection algorithms

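Traditional object detection algorithms[8] identify the position and bounding box of specific objects in images or video, typically in the following steps: image preprocessing (such as scaling, grayscale conversion or normalization); feature extraction (using hand-crafted features or features learned automatically by machine learning); classification or regression (to determine object category and position); and further refinement and filtering of the detections with methods such as non-maximum suppression. Liu et al.[9] used the simple linear iterative clustering algorithm to segment orchard images into superpixel blocks, extracted color features from the blocks to determine candidate regions, and described fruit shape with the histogram of oriented gradients in order to detect and locate fruit. Xia et al.[10] proposed a fruit recognition technique based on statistical features in the HSV color space: the RGB fruit image is converted to HSV, the hue distribution is approximated by a Laplace distribution and used as the feature description of the fruit, the image is segmented with the mean shift algorithm[11], and the fruit category is determined by computing the Mahalanobis distance of the hue data of the input image and comparing it with the preset Mahalanobis distances of the features. Zou[12] captured RGB images of citrus with an industrial camera, converted them from RGB to HSV, computed color histograms for the H, S and V channels, and judged citrus ripeness from the peak of the H channel and its corresponding hue value; experiments showed a ripeness detection accuracy above 90%. Chen et al.[13] proposed a fruit recognition algorithm based on multiple color and texture features: color features are extracted from the RGB and HSV color spaces with color moments and non-uniform quantization, texture features are extracted with local binary patterns, the color and texture feature vectors are combined, and a BP neural network is trained as the classifier. The results showed that combining multiple features raised classification accuracy above 90.0%, higher than any single-feature algorithm. Xu et al.[14] designed a color-based algorithm for recognizing citrus on the tree: images were collected under various weather and lighting conditions, color information was extracted, a citrus recognition color space was built from the difference between fruit and foliage in the R-B color index, and a dynamic threshold method separated the fruit from the background, allowing one or more citrus fruits on the tree to be recognized. The traditional algorithms above rely mainly on single or combined features such as fruit shape and color. They model the background, fuse features, and extract and segment fruit information to detect fruit effectively in natural environments.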
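The color-index segmentation idea described above can be illustrated with a minimal sketch. It is not the implementation of any cited work: the clamped R-B index, the use of Otsu's method as the "dynamic threshold", and the function names `otsu_threshold` and `segment_fruit` are all assumptions made for illustration.

```python
def otsu_threshold(values, levels=256):
    """Return the gray level that maximizes between-class variance (Otsu)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(levels):
        w0 += hist[t]              # pixels at or below level t
        if w0 == 0:
            continue
        w1 = total - w0            # pixels above level t
        if w1 == 0:
            break
        sum0 += t * hist[t]
        m0 = sum0 / w0
        m1 = (sum_all - sum0) / w1
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def segment_fruit(image):
    """image: rows of (R, G, B) tuples with 0-255 integers.

    Builds an R-B index map (negative values clamped to 0, an assumption),
    thresholds it with Otsu's method, and returns (binary mask, threshold).
    """
    index = [[max(0, r - b) for (r, g, b) in row] for row in image]
    flat = [v for row in index for v in row]
    t = otsu_threshold(flat)
    mask = [[1 if v > t else 0 for v in row] for row in index]
    return mask, t
```

Orange citrus pixels have a large R-B value while green leaves and blue sky have a small or negative one, which is why a single data-dependent threshold on this index can separate fruit from background in simple scenes.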

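Traditional object detection algorithms perform well in specific scenarios, but because they depend on hand-crafted features they adapt poorly to complex scenes and to changes in the target. In natural environments their representational power and robustness are limited, and they are easily affected by illumination changes, occlusion by branches and leaves, and overlapping fruit, which lowers recognition accuracy. When the scene changes, a fruit type is added or the features are updated, the feature extractor must be redesigned and retuned, and in special cases the whole system must be retrained. By contrast, deep-learning-based object detection algorithms can extract and learn rich features from large amounts of data and achieve higher accuracy and robustness. When the scene changes or fruit types are added, their recognition ability and robustness can be improved through transfer learning, data augmentation, model ensembling, feature fusion and multimodal data.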

2 Deep-learning-based object detection algorithms

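Deep-learning-based object detection algorithms fall into two categories: single-stage and two-stage. Single-stage algorithms use a single convolutional neural network (CNN) to predict object positions and categories directly, achieving end-to-end detection. They treat object detection as a regression problem and localize and classify objects in one pass, which allows fast detection while maintaining high accuracy. During training and deployment, single-stage models can be further reduced in size with techniques such as pruning and quantization, which makes them suitable for resource-constrained mobile devices or embedded systems. Two-stage algorithms, also known as region-of-interest-based or region-proposal-based object detection algorithms, usually run in two stages: 1) a large number of candidate regions are generated by methods such as selective search or a region proposal network (RPN)[15]; 2) the candidate regions are processed by a network containing a classifier and a bounding-box regressor for object recognition and precise localization.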

2.1 Single-stage object detection algorithms

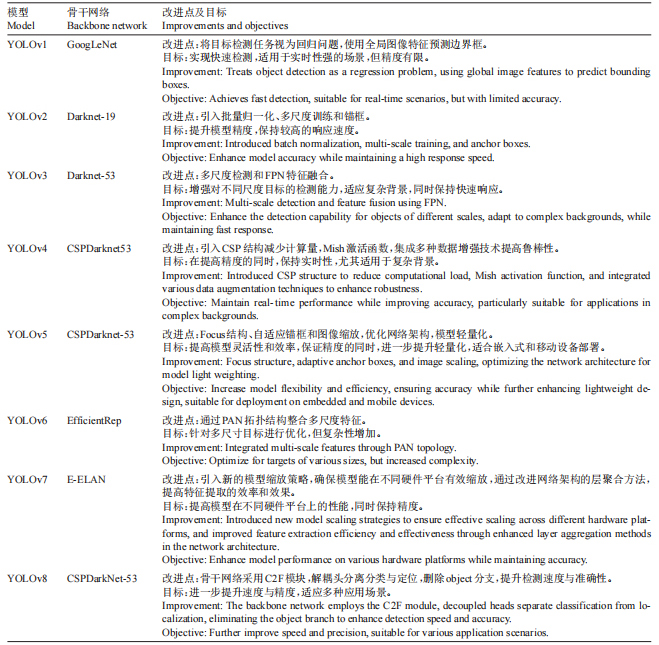

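Single-stage object detection algorithms skip the candidate-region generation step and produce class probabilities and position coordinates directly from the feature map for classification and regression. Commonly used single-stage detectors and the network architectures adopted with them include YOLO, SSD[16], MobileNet[17], ShuffleNet[18] and Swin-Transformer[19], of which the YOLO series is the most widely applied. To address the slow detection speed and repeated feature-region extraction of two-stage algorithms, Redmon et al.[20] proposed YOLOv1, which casts object detection as a regression problem and predicts bounding boxes from global image features. YOLOv1 divides the image into a uniform grid to avoid repeated computation, which raises detection speed and suits automated picking equipment with strict real-time requirements, but its accuracy is relatively low. YOLOv2, proposed by Redmon et al.[21], improved accuracy by introducing anchor boxes and joint training, but still produced false detections in complex scenes and on small objects. To further improve multi-scale feature extraction, Redmon et al.[22] proposed YOLOv3, which adopts the deeper DarkNet-53 backbone and fuses features with a feature pyramid network (FPN)[23], markedly strengthening detection of objects at different scales. YOLOv4, proposed by Bochkovskiy et al.[24], introduced Mosaic data augmentation, the CSPDarkNet-53 backbone and the SPP module, improving accuracy under complex backgrounds and occlusion while remaining real-time. Building on the success of YOLOv4, YOLOv5[25] was released; with adaptive anchor boxes, the Focus module and a lightweight design, it is better suited to resource-constrained devices. YOLOv6, proposed by Li et al.[26], dropped anchor boxes and adopted the EfficientRep and Rep-PAN modules; although detection accuracy improved, its complex structure makes it ill-suited to resource-constrained mobile picking equipment. Wang et al.[27] proposed YOLOv7, which optimizes feature extraction through its BConv, E-ELAN and MPConv layers and improves adaptability to different hardware. Ultralytics released YOLOv8[28], which adopts the C2f module and a decoupled head, further improving detection speed and accuracy and meeting the needs of many scenarios. Throughout its long evolution, the core optimization of the YOLO series has centered on the balance between speed and accuracy. YOLO targets real-time operation and, while maintaining high detection accuracy, meets the fast-response needs of diverse application scenarios (Table 1 lists the improvements and objectives of each YOLO version), which is precisely what research on automated fruit picking requires. YOLO has therefore become the most widely used object detection algorithm for fruit recognition. As the technology develops, many optimized YOLO-based models continue to emerge, offering more effective solutions for improving recognition accuracy and real-time performance.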
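To make the grid-based regression formulation of YOLOv1 concrete, the following sketch encodes one ground-truth box as a regression target. The 7×7 grid matches the original YOLOv1 paper, but the function name `encode_box`, the pixel box format and the returned tuple layout are illustrative assumptions rather than code from any YOLO release.

```python
def encode_box(box, img_w, img_h, s=7):
    """Encode a pixel box (x_min, y_min, x_max, y_max) for an s x s grid.

    The cell that contains the box center is responsible for the object.
    Returns (row, col, x_offset, y_offset, width, height), where the offsets
    are measured inside the cell and width/height are relative to the image.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2 / img_w      # normalized center x in [0, 1]
    cy = (y_min + y_max) / 2 / img_h      # normalized center y in [0, 1]
    col = min(int(cx * s), s - 1)         # clamp so cx == 1.0 stays in range
    row = min(int(cy * s), s - 1)
    return (row, col,
            cx * s - col, cy * s - row,
            (x_max - x_min) / img_w, (y_max - y_min) / img_h)
```

Because every object is assigned to exactly one cell and predicted in a single forward pass, no separate proposal stage is needed, which is the source of the speed advantage discussed above.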

表1 各类YOLO 算法改进点及目标

Table 1 Improvements and objectives of various YOLO algorithms

| Model | Backbone network | Improvements and objectives |
|---|---|---|
| YOLOv1 | GoogLeNet | Improvement: treats object detection as a regression problem, using global image features to predict bounding boxes. Objective: fast detection, suitable for real-time scenarios, but with limited accuracy. |
| YOLOv2 | Darknet-19 | Improvement: introduces batch normalization, multi-scale training and anchor boxes. Objective: raise model accuracy while maintaining a high response speed. |
| YOLOv3 | Darknet-53 | Improvement: multi-scale detection and feature fusion using FPN. Objective: strengthen detection of objects at different scales and adapt to complex backgrounds while maintaining fast response. |
| YOLOv4 | CSPDarknet-53 | Improvement: introduces the CSP structure to reduce computation, the Mish activation function, and a range of data augmentation techniques to improve robustness. Objective: improve accuracy while remaining real-time, particularly under complex backgrounds. |
| YOLOv5 | CSPDarknet-53 | Improvement: Focus structure, adaptive anchor boxes and image scaling, with an optimized network architecture and a lightweight model. Objective: increase flexibility and efficiency and further reduce model size while preserving accuracy, suitable for deployment on embedded and mobile devices. |
| YOLOv6 | EfficientRep | Improvement: integrates multi-scale features through a PAN topology. Objective: optimize for targets of various sizes, at the cost of increased complexity. |
| YOLOv7 | E-ELAN | Improvement: introduces new model scaling strategies so that the model scales effectively across hardware platforms, and improves the efficiency and effectiveness of feature extraction through better layer aggregation in the network architecture. Objective: improve performance on different hardware platforms while maintaining accuracy. |
| YOLOv8 | CSPDarknet-53 | Improvement: the backbone uses the C2f module, a decoupled head separates classification from localization, and the objectness branch is removed to improve detection speed and accuracy. Objective: further improve speed and precision for a variety of application scenarios. |

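In complex natural environments, early-stage citrus fruit is similar in color to the surrounding branches and leaves, so traditional algorithms struggle to identify it accurately and often mistake green foliage for fruit or miss fruit altogether. To address such problems, Song et al.[29] proposed D-YOLOv3, which adopts densely connected convolutional networks (DenseNet)[30] to strengthen feature propagation and enable feature reuse. When building the dataset, they collected citrus images under different weather conditions and applied Gaussian blur, color balancing and other processing, which increased the diversity of the dataset and effectively improved the generalization ability and robustness of the model. Experiments showed that D-YOLOv3 reached a precision of 83.0% on early-stage citrus fruit. Lü et al.[31] proposed YOLO-GC, a detection algorithm for early-stage citrus fruit based on an improved YOLOv5s. To address low accuracy and large model size, the backbone was replaced with the lightweight GhostNet, and a global attention mechanism (GAM)[32] was embedded to improve the extraction of fruit features. To reduce the missed detections caused by occlusion and overlap of branches and leaves, YOLO-GC adopts the GIoU loss function combined with the soft non-maximum suppression (Soft-NMS)[33] algorithm to optimize bounding-box regression. Experiments showed that, compared with YOLOv5s, the weight file of YOLO-GC was 53.9% smaller at only 6.69 MB, and average precision rose by 1.2% to 97.8%. Detecting green citrus fruit on an edge device took only 108 ms per inference. Tie et al.[34] proposed YOLOv5-SC, a citrus recognition method that improves YOLOv5 with a hybrid attention mechanism. SE[35] attention and CA[36] attention are embedded in the backbone so that the network captures channel information as well as direction and position information, allowing the model to extract and localize citrus image features better. YOLOv5-SC introduces Varifocal Loss[37] as the loss function to better balance the losses of positive and negative samples. Experiments showed that YOLOv5-SC reached an average precision of 95.1% and reduced the misdetection of green background as green citrus fruit.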
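Soft-NMS, used above to reduce missed detections of overlapping fruit, lowers the scores of overlapping boxes instead of discarding them outright. The sketch below shows the Gaussian-decay variant in plain Python; the parameter values (`sigma=0.5`, `score_thresh=0.001`) and the box format `(x1, y1, x2, y2)` are assumptions for illustration and are not taken from YOLO-GC.

```python
import math


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS: returns (box, score) pairs in selection order."""
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # Select the highest-scoring remaining box.
        best = max(range(len(remaining)), key=lambda i: remaining[i][1])
        box, score = remaining.pop(best)
        kept.append((box, score))
        # Decay the scores of the other boxes according to their overlap.
        decayed = []
        for other, s in remaining:
            s *= math.exp(-(iou(box, other) ** 2) / sigma)
            if s >= score_thresh:
                decayed.append((other, s))
        remaining = decayed
    return kept
```

With hard NMS, a second fruit that overlaps a higher-scoring one beyond the IoU threshold is deleted; here it survives with a reduced score, which is the property that helps in dense clusters.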

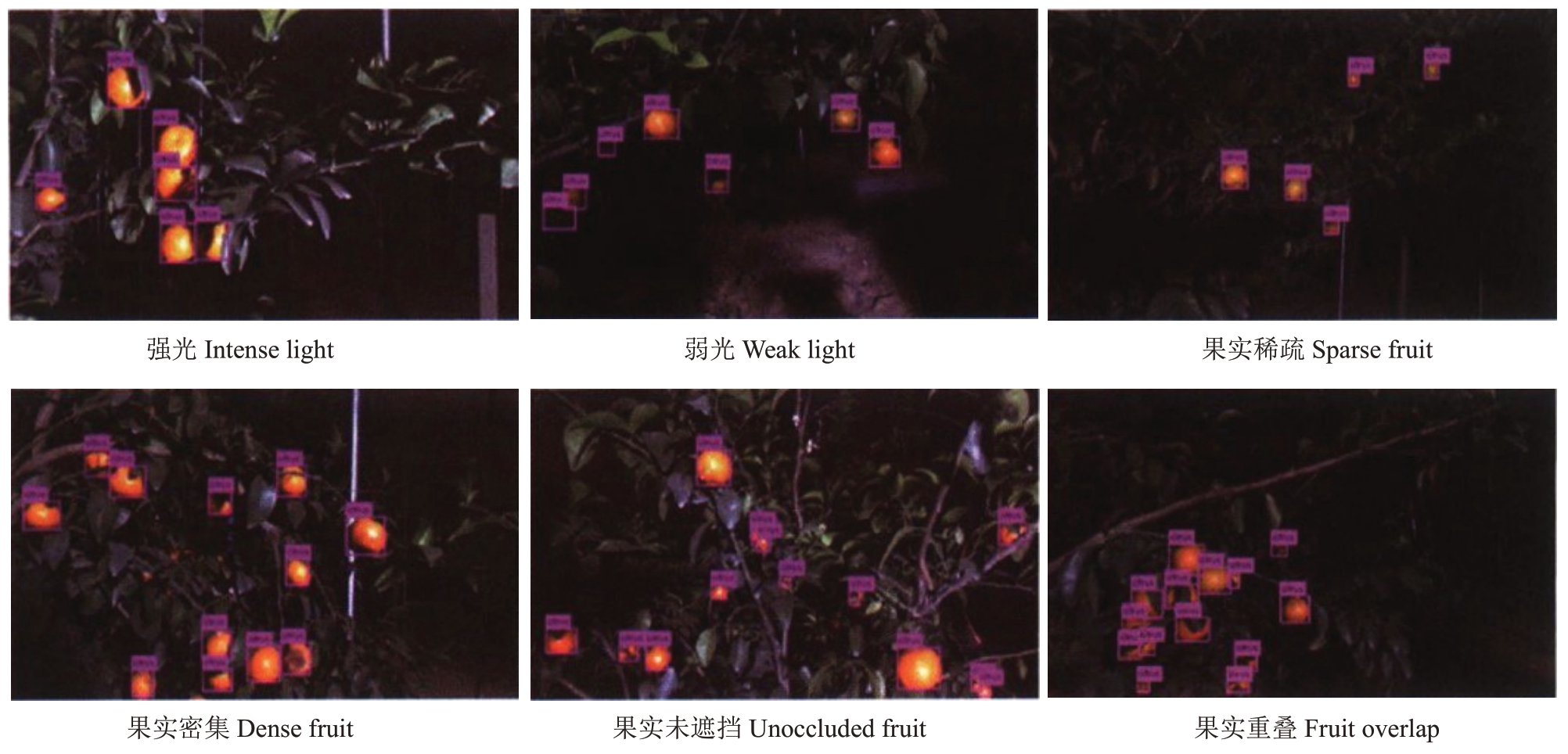

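In natural environments, near-spherical fruits are distributed on the tree in various poses. When recognizing long-distance, wide-field-of-view scenes such as orchards, occlusion by leaves, small fruit targets and dense fruit clusters can all cause missed or false detections. To address these problems, Ma et al.[38] proposed a pear recognition algorithm based on an improved YOLOv4, in which max pooling in the SPP module is replaced with average pooling to retain more target information and reduce missed and false detections. The algorithm also replaces the convolutions before and after the SPP module, some convolutions in PANet and the convolutions in the output section with depthwise separable convolutions, reducing model size while largely preserving the effect of the convolutions. Tested on newly acquired image samples, the improved YOLOv4 model had a weight file of 136 MB and reached an average precision of 90.2%. Liu et al.[39] improved YOLOv5 and proposed an orange recognition algorithm. Some C3 modules in the backbone are replaced with RepVGG modules to strengthen feature extraction, and the ordinary convolutions in the neck are replaced with ghost shuffle convolution (GConv)[40], which reduces the number of parameters while maintaining accuracy. To locate targets more accurately, efficient channel attention (ECA)[41] is added before the prediction head. Experiments showed that the improved algorithm reached an average precision of 90.1% on oranges and effectively alleviated false and missed detections; the detection results are shown in Fig. 1. The algorithm performed well in unoccluded, complex-illumination, leaf-occluded and dense small-target scenes, showing strong robustness and generalization ability.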

图1 基于YOLOv5 改进的算法检测效果图

Fig.1 Detection effect of improved algorithm based on YOLOv5
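The saving obtained by swapping ordinary convolutions for depthwise separable ones, as in the improved YOLOv4 above, can be checked with simple arithmetic. The sketch below counts weights only (biases ignored); the layer size in the usage note is a hypothetical example and does not come from any cited model.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k standard convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 conv that mixes channels
    return depthwise + pointwise


def reduction_ratio(k, c_in, c_out):
    """Separable / standard weight ratio, equal to 1/c_out + 1/k**2."""
    return (depthwise_separable_params(k, c_in, c_out)
            / standard_conv_params(k, c_in, c_out))
```

For a hypothetical 3×3 layer with 256 input and 512 output channels, the standard convolution has 1,179,648 weights and the separable version 133,376, roughly 11% of the original.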

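He et al.[42] designed an improved algorithm based on YOLOv5s that effectively raised the accuracy of plum recognition. The downsampling convolutions in the backbone are replaced with FM modules so that the feature information of heavily occluded and small targets is not lost during downsampling, and a weighted combination of focal loss and cross-entropy is used as the classification loss to improve recognition of dense targets. In testing, average precision rose by 2.8% to 97.6%, and average precision on small targets reached 92.0%. For accurate recognition of citrus fruit, Huang et al.[43] proposed a method based on an improved YOLOv5 model. It introduces the convolutional block attention module (CBAM)[44] to improve feature extraction and reduce missed detections of occluded and small targets, and replaces the GIoU loss with the Alpha-IoU[45] loss for bounding-box regression to improve localization accuracy. The model reached an average precision of 91.3%, with a detection time of 16.7 ms per citrus image. Yuan et al.[46] proposed YOLOv4-Tiny-Peach, a real-time peach recognition algorithm for orchard environments based on an improved YOLOv4-Tiny. It introduces CBAM in the backbone, adds a large-scale shallow feature layer to the neck to improve small-target accuracy, and fuses features at different scales with a bidirectional feature pyramid network (BiFPN)[47]. After training, YOLOv4-Tiny-Peach reached an average precision of 87.9%, and its improvement over YOLOv4-Tiny was most evident in wide-field-of-view scenes and on early-stage peaches. To improve the visual detection capability of all-weather automated picking equipment at night, Xiong et al.[48] proposed Des-YOLOv3, which draws on ResNet[49] and DenseNet to reuse and fuse multi-layer features, strengthening robustness to small and overlapping or occluded fruit at night; the detection results are shown in Fig. 2, and experiments showed an average precision of 97.7%. Xiong et al.[50] subsequently addressed night-time picking again with BI-YOLOv5s, a citrus recognition algorithm that combines an improved YOLOv5s with an active light source: BiFPN provides multi-scale cross connections and weighted feature fusion, CA attention strengthens the extraction of location information, and the C3TR module reduces computation and extracts global information. Under the light-source color conditions used in the experiments, the model recognized citrus at night with an accuracy of 95.3%, supporting all-weather automated picking. Yu et al.[51] replaced some ordinary convolutions in the YOLOv8 family with omni-dimensional dynamic convolution to improve robustness and replaced the loss function with MPDIoU[52], addressing the degeneration of the original CIoU loss. In experiments, the average precision of the improved YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l and YOLOv8x models rose to 88.3%, 89.3%, 89.6%, 89.9% and 90.1%, respectively. Yue et al.[53] designed Rep-YOLOv8, a new feature fusion network based on YOLOv8 that fuses high-level semantic and low-level spatial features. An EMA attention module is integrated into YOLOv8 to suppress general feature information such as background and occluding foliage so that the model focuses on fruit regions. Finally, the C2f module is replaced with a three-branch DWR module to improve small-target detection through multi-scale feature fusion, and the Inner-SIoU[54] loss function is used to improve accuracy. With apples as the detection target in an orchard environment, comparative experiments were run for different fruit counts and ripeness levels. The algorithm reached an average precision of 94.0%, and in wide-field-of-view scenes with ripe fruit all indicators improved markedly, providing effective support for fruit recognition.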

图2 Des-YOLOv3 算法检测效果图

Fig.2 Detection effect of Des-YOLOv3 algorithm
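A weighted combination of focal loss and cross-entropy, of the kind described above for dense plum targets, can be sketched for a single binary prediction as follows. The defaults `gamma=2.0` and `alpha=0.25` follow common practice for focal loss, while the mixing weight `w` and the function names are hypothetical; the cited work does not specify them here.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for predicted probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def weighted_classification_loss(p, y, w=0.5):
    """Hypothetical mix: w * focal loss + (1 - w) * cross-entropy."""
    return w * focal_loss(p, y) + (1.0 - w) * cross_entropy(p, y)
```

The modulating factor shrinks the loss of well-classified examples much more than that of hard ones, so training concentrates on difficult cases such as occluded or densely packed fruit.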

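Object detection models usually have a large number of parameters and complex network structures, and when they are deployed on embedded platforms the limited computing resources severely restrict real-time response. To address this, Lü et al.[55] proposed YOLO-LITE, a lightweight citrus recognition method based on an improved YOLOv3 that uses MobileNet-v2 as the backbone for easier deployment on mobile terminals and introduces the GIoU[56] bounding-box regression loss. Experiments showed that YOLO-LITE detected citrus at up to 246 frames·s⁻¹ with a weight file of 28 MB. Wang et al.[57] proposed YOLOv4-CA, a lightweight real-time apple detection algorithm based on YOLOv4. It uses the lightweight MobileNet-v3 as the feature extraction network and integrates the SE attention module as the basic block of the neck, increasing the network's sensitivity to feature channels and strengthening feature extraction. To compress the parameter count and computation, all ordinary convolutions in the feature fusion network are replaced with depthwise separable convolutions. Experiments showed an average detection precision of 92.2%, a detection speed of 15.11 frames·s⁻¹ on an embedded platform and a memory footprint of 54.1 MB, which maintains accuracy while meeting the real-time requirements of picking robots. Zeng et al.[58] proposed YOLO-Faster for fast real-time peach detection. Based on YOLOv5s, the backbone is replaced with FasterNet[59], whose partial convolution (PConv)[60] effectively reduces redundant computation and memory access, making the model faster and lighter. Between the backbone and the neck, a convolutional attention module and a regular convolution module are added in series to strengthen feature fusion and extraction between the two and improve detection accuracy. SIoU[61] is adopted as the loss function to measure the match between predicted and ground-truth boxes better and to improve detection quality. After training on a self-built dataset and deployment on the Jetson Nano embedded device, the algorithm reached an average precision of 88.6% with an 8.3 MB weight file, a 1% gain in average precision over YOLOv5s. Zhao et al.[62] proposed an apple recognition algorithm based on an improved YOLOv3 that combines the residual modules of DarkNet-53 with the CSP module[63] to reduce computation and adds an SPP module to fuse global and local features and raise small-target recall. Soft-NMS is used to improve recognition of overlapping and occluded fruit. The improved algorithm reached an average precision of 96.3%, 3.8% higher than YOLOv3, meeting the accuracy and real-time requirements of automated apple picking. However, accuracy may suffer when lighting is poor or the surface texture of the fruit is indistinct. Yan et al.[64] optimized YOLOv5s to improve its representational power and its handling of spatial information loss, making it better suited to embedded devices. First, the convolution layer on the bridge branch of the BottleneckCSP module in the backbone is removed, and the input feature map of the module is concatenated along the depth dimension directly with the output feature map of the other branch, reducing the number of parameters in the module. Second, SE attention is embedded in the network so that a new feature recalibration strategy is learned automatically, effectively improving representational power. Finally, the output of the lower-level feature extraction layer with a larger receptive field is fused with the output of the feature extraction layer that precedes the medium-size target detection layer, compensating for the spatial information lost to the low resolution of high-level features; the detection results are shown in Fig. 3. Wang[65] aimed at accurate and efficient citrus recognition for picking and built LT-YOLOv7, a lightweight object detection model for picking robots, to address the high storage requirements of YOLOv7 and its poor fit for mobile terminals. RepVGG[66] serves as the backbone, and its multi-scale feature maps are concatenated with the YOLOv7 neck to retain global features and reduce overall computation. Depthwise separable convolution is introduced in the neck to reduce parameters, save memory and improve accuracy. ECA is introduced to enhance feature representation, improve target discrimination and reduce interference from leaves and branches. In the prediction stage, the model filters predicted boxes with the soft DIoU_NMS algorithm to improve recognition of overlapping objects. The optimized LT-YOLOv7 reached an average precision of 97.0% on overlapping and occluded citrus fruit; as Fig. 4 shows, the algorithm still detects well even when the fruit is occluded.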

图3 基于YOLOv5s 改进算法的晴天和阴天检测效果

Fig.3 Detection effect of the improved YOLOv5s algorithm on sunny and cloudy days

图4 LT-YOLOv7 算法检测效果图

Fig.4 Detection effect of LT-YOLOv7 algorithm
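Several of the lightweight models above adopt the GIoU bounding-box regression loss. A minimal sketch of GIoU for two axis-aligned boxes follows; the box format `(x1, y1, x2, y2)` and the function names are assumptions, and the loss is written in the common form 1 − GIoU.

```python
def giou(a, b):
    """Generalized IoU of two boxes (x1, y1, x2, y2); value lies in (-1, 1]."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    # Area of the smallest axis-aligned box that encloses both inputs.
    enclose = ((max(a[2], b[2]) - min(a[0], b[0]))
               * (max(a[3], b[3]) - min(a[1], b[1])))
    return inter / union - (enclose - union) / enclose


def giou_loss(pred, target):
    """Regression loss 1 - GIoU; zero only when the boxes coincide."""
    return 1.0 - giou(pred, target)
```

Unlike plain IoU, which is zero for every pair of disjoint boxes, GIoU keeps decreasing as the boxes move further apart, so the loss still provides a useful signal when a prediction does not overlap the fruit at all.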

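Yang et al.[67] proposed MobileOne-YOLOv7 to address the high density and overlap of apple fruit and the heavy parameterization of the network model. MobileOne-YOLOv7 uses multi-scale feature extraction to build a feature pyramid as model input. Multi-scale training improves the robustness of the model and avoids excessive computation during multi-scale feature extraction. The last ELAN module of the backbone is replaced with a MobileOne module to strengthen the nonlinearity and representational power of the model. The SPPCSPC module is changed to an SPPFCSPC module, turning the serial channel into a parallel channel and speeding up feature fusion without changing the receptive field. In addition, a prediction head is added to the neck to improve detection accuracy for objects at different scales. By introducing re-parameterizable branches, the model gains capacity during training and is simplified at inference, lowering memory access cost. Zhang et al.[68] proposed a lightweight apple recognition algorithm based on an improved YOLOv7, replacing some ordinary convolutions in the multi-branch stacking module with PConv to reduce parameters and computation. ECA is added to reduce false and missed detections of occluded targets and preserve accuracy. During training, a learning-rate optimization strategy based on the sparrow search algorithm (SSA)[69] is used, which markedly improves detection accuracy. Experiments showed an average precision of 97.0%, with parameters and computation reduced by 22.9% and 27.4%, respectively, making the model suitable for embedded devices.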

2.2 Two-stage object detection algorithms

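Girshick et al.[70] proposed R-CNN, which uses selective search to extract about 2000 bottom-up, category-independent region proposals. A large CNN computes a fixed-length feature for each region, and linear support vector machines (SVM)[71] classify these features to decide whether each region contains a given object category. SPP-Net, proposed by He et al.[72], improved R-CNN so that it can handle images of arbitrary size. Through spatial pyramid pooling, SPP-Net extracts and integrates features at different scales to produce a fixed-length output. Unlike R-CNN, SPP-Net does not run the CNN on every candidate region: the whole image is fed in once to obtain a feature map, from which the features of the regions of interest are extracted directly, reducing redundant computation and raising speed. Building on SPP-Net, Girshick[73] proposed Fast R-CNN, which takes the whole image and a set of object proposals as input and generates a feature map through several convolutional and max-pooling layers. Running the convolutions only once removes the redundancy of repeated convolution. Fast R-CNN uses a region of interest (ROI) pooling layer to obtain a fixed-length feature vector from the feature map for each proposal, passes it through fully connected layers, and ends in two output layers for classification and regression.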
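How pyramid pooling turns feature maps of different sizes into a fixed-length vector can be shown on a single-channel map. This is a simplified sketch: the pyramid levels `(1, 2, 4)`, the bin-boundary rule and the function name `spatial_pyramid_pool` are illustrative assumptions, not the exact configuration of SPP-Net.

```python
import math


def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool a 2D map (list of rows) into n x n bins for each level n.

    The output length is sum(n * n for n in levels), whatever the input size.
    """
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for n in levels:
        for i in range(n):
            # Bin edges use floor/ceil so that no bin is ever empty.
            r0, r1 = (i * h) // n, math.ceil((i + 1) * h / n)
            for j in range(n):
                c0, c1 = (j * w) // n, math.ceil((j + 1) * w / n)
                out.append(max(feature_map[r][c]
                               for r in range(r0, r1)
                               for c in range(c0, c1)))
    return out
```

With levels (1, 2, 4) every input yields 1 + 4 + 16 = 21 values per channel, which is what allows the fully connected layers that follow to accept images of arbitrary size.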

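Faster R-CNN, proposed by Ren et al.[74], abandons the traditional selective search algorithm and introduces the RPN. The RPN generates anchor boxes of different sizes with a sliding window, labels them as positive or negative according to preset thresholds, and outputs candidate bounding boxes with their scores. After ROI pooling, these candidate regions are mapped to fixed-size feature maps, and fully connected layers then determine object category and refine position. Dai et al.[75] proposed the region-based R-FCN, which consists of shared fully convolutional structures. R-FCN outputs position-sensitive score maps that encode relative spatial position, and its ROI pooling layer extracts information from these score maps. Cai et al.[76] proposed Cascade R-CNN, which comprises a proposal sub-network and an ROI detection sub-network. Cascade R-CNN decomposes the regression task with cascaded bounding-box regression, using a specialized regressor at each stage. Cascaded regression acts as a resampling mechanism and addresses the problem that the initial hypothesis distribution is heavily skewed toward low quality. Among these algorithms, Faster R-CNN was the first deep-learning-based object detection algorithm to be trained end to end.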
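The anchor boxes that an RPN places at each sliding-window position can be generated as in the sketch below. The three scales and three aspect ratios match the defaults reported for Faster R-CNN, but the function name `anchors_at` and the box format are assumptions, and the labeling of anchors against ground truth is omitted.

```python
def anchors_at(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return anchors (x1, y1, x2, y2) centered at (cx, cy).

    Each anchor has area scale ** 2 and height / width equal to the ratio,
    giving len(scales) * len(ratios) anchors per position.
    """
    boxes = []
    for s in scales:
        for r in ratios:
            w = s / r ** 0.5      # width shrinks as the ratio grows
            h = s * r ** 0.5      # height grows as the ratio grows
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes
```

For near-spherical fruit, whose bounding boxes are close to square, the ratio-1.0 anchors are the ones most likely to match, which is one reason anchor settings are often retuned for fruit datasets.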

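Building on two-stage object detection algorithms, researchers have optimized detection models and proposed efficient and accurate detection algorithms for near-spherical fruits in automated picking. Ren et al.[77] used citrus images collected in orchards to compare traditional detection algorithms with Faster R-CNN. With enhancement preprocessing and unoccluded fruit, the traditional algorithms outperformed Faster R-CNN, but when fruit overlapped or was occluded, Faster R-CNN performed better. Wan et al.[78] proposed a multi-class fruit detection framework based on an improved Faster R-CNN. The backbone is VGG-16, comprising 13 convolutional layers, 13 ReLU layers and 4 pooling layers. To avoid overfitting on small samples and to balance model complexity against data volume, the algorithm applies regularization-based weight decay to the parameters, adds two loss functions to optimize the parameters of the convolutional and pooling layers, and adjusts automatically to the shooting angle to keep the size and kernel parameters of each convolutional layer reasonable, improving detection accuracy. Liu et al.[79] proposed a fruit recognition and localization algorithm based on an improved R-FCN, consisting of an RPN and an FCN. The RPN generates candidate region boxes, the FCN extracts pixel-level features, and the detection results are visualized through deconvolution. To address the low accuracy on occluded and overlapping citrus fruit, Huang et al.[80] proposed a deep-learning-based algorithm for segmenting overlapping citrus and restoring its shape. A Mask R-CNN with a PointRend branch performs recognition and edge-refined instance segmentation of citrus; a coarse shape restoration model is built on a U-Net with an encoder-decoder structure, with a local penalty loss and an IoU shape loss; the ROI is extracted from the coarse result by machine vision methods; and a PConv-based fine restoration model completes the restoration of fruit shape. The method reached an average precision of 93.7% for citrus recognition and a segmentation precision of 96.3%. Jing et al.[81] proposed a method for recognizing fruit on the side of apple trees with different feature networks, aimed at orchard yield estimation. Side-view images of apple trees were collected in the orchard to test different feature extraction networks combined with Faster R-CNN. VGG-16 and ResNet-50 were each used as the feature extraction network, and the two Faster R-CNN models were trained with the same learning parameters. The model with VGG-16 outperformed the one with ResNet-50 on all indicators, with a recognition precision of 91.0% and an inference time of 1.4 s per image. Jia et al.[82] captured RGB images of different fruits in natural environments and added a likelihood function and a regularization function to Faster R-CNN to keep the size and kernel parameters of the convolutional layers within a reasonable range. In recognition tests on different fruits the overall accuracy reached 99.7%, while the accuracy for oranges was 77.3%. Lu et al.[83] captured images of green citrus fruit in orchards and adopted a deep-shallow feature fusion strategy to enrich the feature information extracted at each stage of the Mask R-CNN backbone; by introducing combined connection blocks between backbones, the number of channels was reduced and accuracy improved. The improved Mask R-CNN reached an average precision of 95.4% on green citrus fruit, 1.4% higher than the original model. Min et al.[84] designed the multi-scale attention network (MSANet) to aggregate attention features from different CNN layers. MSANet introduces a hybrid attention mechanism that aggregates spatial-channel attention and multiple attention features from different layers into a final unified representation, making it more robust and comprehensive.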

3 Summary and future development trends

3.1 Summary

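This paper reviews the detection algorithms that have performed well in fruit picking, focusing on traditional and deep-learning-based object detection algorithms. Traditional algorithms for recognizing near-spherical fruits rely on hand-crafted features extracted by explicit rules, which makes every step highly interpretable. They need little data, since a small amount of labeled data suffices for tuning and optimization, and they avoid the computation of deep neural networks, so their computational complexity is relatively low and their demands on computing resources are modest. In natural environments, however, traditional fruit detection algorithms often fail to extract useful information accurately in complex scenes with overlapping fruit, changing illumination and occluding branches. When the fruit type changes, the algorithm may need to be modified by hand, so generalization is poor.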

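By contrast, deep-learning-based object detection algorithms use multi-level neural network architectures, and researchers can adjust the modules within the network to strengthen feature representation, reduce the parameter count and speed up inference. The advantage of deep neural networks is that, by learning from large-scale data, they extract complex and abstract multi-level features on their own and, through hierarchical feature learning, progressively capture semantic information from low level to high level, improving detection accuracy and robustness for complex targets and changing environments.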

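For the recognition of near-spherical fruits, researchers have introduced lightweight feature extraction networks (such as MobileNet and GhostNet) and various attention modules (such as SE, CA and CBAM). The lightweight networks lower computing cost and memory use, making the algorithms better suited to resource-constrained embedded devices. The attention modules increase the model's sensitivity to key features, so that the network captures the key features of the target more precisely and suppresses general feature information, which raises detection accuracy, improves performance in complex environments and reduces the false and missed detections caused by complex backgrounds. In addition, researchers have applied a range of advanced loss functions (such as Varifocal Loss, SIoU and Alpha-IoU) to balance the influence of positive and negative samples and improve bounding-box regression accuracy.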

3.2 Development trends

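In recent years, object detection algorithms have been widely applied to the recognition of near-spherical fruits and have shown strong performance in complex detection tasks such as occluded and overlapping fruit, yield prediction, fruit classification and grading, and surface defect detection. However, because orchard conditions are complex and variable, the general applicability of existing detection algorithms for near-spherical fruits still needs improvement. Based on the development trends of these algorithms, future research can focus on the following directions: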

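(1) Model optimization: deep-learning-based object detection algorithms need continual improvement to meet fruit recognition requirements. Introducing attention mechanisms, changing the structure of the feature extraction network, optimizing the loss function and adjusting network depth and width can raise recognition accuracy, speed up recognition and lower the rates of missed and false detections. Large models from other fields can also be examined to see whether high-performing models can be adapted to fruit recognition to further improve accuracy and efficiency.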

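(2) Dataset construction and augmentation: according to the requirements of different picking tasks, images of near-spherical fruits at every growth stage and of different varieties should be collected to build a dataset covering different weather, lighting conditions (front light and backlight), degrees of fruit overlap and occlusion. The data can be augmented with image processing methods (such as rotation, flipping, cropping, scaling, noise addition and color transformation) or with image generation based on generative adversarial networks (GAN)[85]. Training on diverse datasets strengthens the generalization ability and robustness of the model[86].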

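(3) Multimodal data fusion: to further improve the accuracy and adaptability of near-spherical fruit recognition, future research can incorporate the three-dimensional information acquired by lidar and depth cameras[87] to capture fruit shape and position more completely. Multimodal data helps strengthen model robustness, especially when fruit is heavily occluded or lighting is very poor.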
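Geometric augmentations such as the flipping and rotation mentioned in direction (2) must transform the bounding-box labels together with the pixels. The sketch below does this for a horizontal flip and a 90° clockwise rotation on an image stored as a list of rows; the function names and the box format `(x1, y1, x2, y2)` in pixel-edge coordinates are assumptions for illustration.

```python
def hflip(image, boxes):
    """Mirror the image left to right and update boxes (x1, y1, x2, y2)."""
    w = len(image[0])
    flipped = [row[::-1] for row in image]
    new_boxes = [(w - x2, y1, w - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


def rot90_cw(image, boxes):
    """Rotate the image 90 degrees clockwise and update the boxes.

    A point (x, y) maps to (h - y, x), where h is the original height.
    """
    h = len(image)
    rotated = [list(col) for col in zip(*image[::-1])]
    new_boxes = [(h - y2, x1, h - y1, x2) for (x1, y1, x2, y2) in boxes]
    return rotated, new_boxes
```

Because near-spherical fruit looks similar from any in-plane orientation, such transforms produce plausible new training samples at no labeling cost.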

[1] 刘袁,黄彪,陈昌银,杨文达,张华东,杨涛.水果采摘机器人采摘装置机研究现状[J].农业科学,2021,11(2):129-132.LIU Yuan,HUANG Biao,CHEN Changyin,YANG Wenda,ZHANG Huadong,YANG Tao. Research status of fruit picking robot picking device[J]. Journal of Agricultural Sciences,2021,11(2):129-132.

[2] 戴军.机器视觉技术在瓜菜检测应用中的研究进展[J].中国瓜菜,2023,36(11):1-9.DAI Jun. Research progress of machine vision technology in the detection of cucurbits and vegetables[J]. China Cucurbits and Vegetables,2023,36(11):1-9.

[3] 吴剑桥,范圣哲,贡亮,苑进,周强,刘成良.果蔬采摘机器手系统设计与控制技术研究现状和发展趋势[J].智慧农业(中英文),2020,2(4):17-40.WU Jianqiao,FAN Shengzhe,GONG Liang,YUAN Jin,ZHOU Qiang,LIU Chengliang.Research status and development direction of design and control technology of fruit and vegetable picking robot system[J].Smart Agriculture,2020,2(4):17-40.

[4] 初广丽,张伟,王延杰,丁南南,刘艳滢.基于机器视觉的水果采摘机器人目标识别方法[J].中国农机化学报,2018,39(2):83-88.CHU Guangli,ZHANG Wei,WANG Yanjie,DING Nannan,LIU Yanying. A method of fruit picking robot target identification based on machine vision[J]. Journal of Chinese Agricultural Mechanization,2018,39(2):83-88.

[5] 杨健,杨啸治,熊串,刘力.基于改进YOLOv5 的番茄果实识别估产方法[J].中国瓜菜,2024,37(6):61-68.YANG Jian,YANG Xiaozhi,XIONG Chuan,LIU Li. An improved YOLOv5-based method for tomato fruit identification and yield estimation[J]. China Cucurbits and Vegetables,2024,37(6):61-68.

[6] DENG J,XUAN X J,WANG W F,LI Z,YAO H W,WANG Z Q.A review of research on object detection based on deep learning[J]. Journal of Physics:Conference Series,2020,1684(1):012028.

[7] DU L X,ZHANG R Y,WANG X T. Overview of two-stage object detection algorithms[C]//Journal of Physics:Conference Series.IOP Publishing,2020,1544(1):012033.

[8] 蒋焕煜,彭永石,申川,应义斌.基于双目立体视觉技术的成熟番茄识别与定位[J].农业工程学报,2008,24(8):279-283.JIANG Huanyu,PENG Yongshi,SHEN Chuan,YING Yibin.Recognizing and locating ripe tomatoes based on binocular stereovision technology[J]. Transactions of the Chinese Society of Agricultural Engineering,2008,24(8):279-283.

[9] LIU X Y,ZHAO D A,JIA W K,JI W,SUN Y P. A detection method for apple fruits based on color and shape features[J].IEEE Access,2019,7:67923-67933.

[10] 夏康利,何强.基于颜色统计的水果采摘机器人水果识别的研究[J].南方农机,2022,53(24):11-16.XIA Kangli,HE Qiang. Research on fruit recognition for fruit-picking robots based on color statistics[J]. China Southern Agricultural Machinery,2022,53(24):11-16.

[11] COMANICIU D,MEER P. Mean shift:A robust approach toward feature space analysis[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence,2002,24(5):603-619.

[12] 邹伟.基于机器视觉技术的柑橘果实成熟度分选研究[J].农业与技术,2023,43(17):41-44.ZOU Wei.Research on citrus fruit maturity sorting based on machine vision technology[J]. Agriculture and Technology,2023,43(17):41-44.

[13] 陈雪鑫,卜庆凯.基于多颜色和局部纹理的水果识别算法研究[J].青岛大学学报(工程技术版),2019,34(3):52-58.CHEN Xuexin,BU Qingkai. Research on fruit recognition algorithm based on multi-color and local texture[J].Journal of Qingdao University (Engineering & Technology Edition),2019,34(3):52-58.

[14] 徐惠荣,叶尊忠,应义斌. 基于彩色信息的树上柑橘识别研究[J].农业工程学报,2005,21(5):98-101.XU Huirong,YE Zunzhong,YING Yibin. Identification of citrus fruit in a tree canopy using color information[J]. Transactions of the Chinese Society of Agricultural Engineering,2005,21(5):98-101.

[15] FAN Q,ZHUO W,TANG C K,TAI Y W.Few-shot object detection with attention-RPN and multi-relation detector[C]// 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR).Seattle,WA,USA:IEEE,2020:4012-4021.

[16] LIU W,ANGUELOV D,ERHAN D,SZEGEDY C,REED S,FU C Y,BERG A C. SSD:Single shot MultiBox detector[C]//Computer Vision-ECCV 2016:14th European Conference.Amsterdam,The Netherlands:Springer International Publishing,2016:21-37.

[17] HOWARD A G,ZHU M L,CHEN B,KALENICHENKO D,WANG W J,WEYAND T,ANDREETTO M,ADAM H,HEATON J. MobileNets:Efficient convolutional neural networks for mobile vision applications[EB/OL]. 2017:1704.04861. https://arxiv.org/abs/1704.04861v1.

[18] ZHANG X Y,ZHOU X Y,LIN M X,SUN J.ShuffleNet:An extremely efficient convolutional neural network for mobile devices[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City,UT,USA:IEEE,2018:6848-6856.

[19] LIU Z,LIN Y T,CAO Y,HU H,WEI Y X,ZHANG Z,LIN S,GUO B N.Swin transformer:Hierarchical vision transformer using shifted windows[C]//2021 IEEE/CVF International Conference on Computer Vision (ICCV). Montreal,QC,Canada:IEEE,2021:9992-10002.

[20] REDMON J,DIVVALA S,GIRSHICK R,FARHADI A. You only look once:Unified,real-time object detection[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition(CVPR).Las Vegas,NV,USA:IEEE,2016:779-788.

[21] REDMON J,FARHADI A. YOLO9000:Better,faster,stronger[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu,HI,USA:IEEE,2017:6517-6525.

[22] REDMON J,FARHADI A. YOLOv3:An incremental improvement[EB/OL]. 2018:1804.02767. https://arxiv.org/abs/1804.02767v1.

[23] LIN T Y,DOLLÁR P,GIRSHICK R,HE K M,HARIHARAN B,BELONGIE S.Feature pyramid networks for object detection[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). July 21-26,2017,Honolulu,HI,USA.IEEE,2017:936-944.

[24] BOCHKOVSKIY A,WANG C Y,LIAO H Y M.YOLOv4:Optimal speed and accuracy of object detection[EB/OL]. 2020:2004.10934.https://arxiv.org/abs/2004.10934v1

[25] JOCHER G. YOLOv5 by Ultralytics (Version 7.0)[CP]. 2020. https://doi.org/10.5281/zenodo.3908559.

[26] LI C Y,LI L L,JIANG H L,WENG K H,GENG Y F,LI L,KE Z D,LI Q Y,CHENG M,NIE W Q,LI Y D,ZHANG B,LIANG Y F,ZHOU L Y,XU X M,CHU X X,WEI X M,WEI X L. YOLOv6:A single-stage object detection framework for industrial applications[EB/OL]. 2022:2209.02976. https://arxiv.org/abs/2209.02976.

[27] WANG C Y,BOCHKOVSKIY A,LIAO H Y M. YOLOv7:Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]//2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver,BC,Canada:IEEE,2023:7464-7475.

[28] VARGHESE R,M S. YOLOv8:A novel object detection algorithm with enhanced performance and robustness[C]//2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS). Chennai,India:IEEE,2024:1-6.

[29] 宋中山,刘越,郑禄,帖军,汪进.基于改进YOLOV3 的自然环境下绿色柑橘的识别算法[J].中国农机化学报,2021,42(11):159-165.SONG Zhongshan,LIU Yue,ZHENG Lu,TIE Jun,WANG Jin.Identification of green citrus based on improved YOLOV3 in natural environment[J]. Journal of Chinese Agricultural Mechanization,2021,42(11):159-165.

[30] HUANG G,LIU Z,VAN DER MAATEN L,WEINBERGER K Q. Densely connected convolutional networks[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition(CVPR).Honolulu,HI,USA:IEEE,2017:2261-2269.

[31] 吕强,林刚,蒋杰,王明之,张皓杨,易时来. 基于改进YOLOv5s 模型的自然场景中绿色柑橘果实检测[J].农业工程学报,2024,40(18):147-154.LÜ Qiang,LIN Gang,JIANG Jie,WANG Mingzhi,ZHANG Haoyang,YI Shilai. Detecting green citrus fruit in natural scenes using improved YOLOv5s model[J]. Transactions of the Chinese Society of Agricultural Engineering,2024,40(18):147-154.

[32] LIU Y C,SHAO Z R,HOFFMANN N.Global attention mechanism:Retain information to enhance channel-spatial interactions[EB/OL].2021:2112.05561.https://arxiv.org/abs/2112.05561v1

[33] BODLA N,SINGH B,CHELLAPPA R,DAVIS L S. Soft-NMS:Improving object detection with one line of code[C]//2017 IEEE International Conference on Computer Vision (ICCV). Venice:IEEE,2017:5561-5569.

[34] 帖军,赵捷,郑禄,吴立锋,洪博文.改进YOLOv5 模型在自然环境下柑橘识别的应用[J].中国农业科技导报,2024,26(7):111-120.TIE Jun,ZHAO Jie,ZHENG Lu,WU Lifeng,HONG Bowen.Application of improved YOLOv5 model in citrus recognition in natural environment[J]. Journal of Agricultural Science and Technology,2024,26(7):111-120.

[35] HU J,SHEN L,SUN G. Squeeze-and-excitation networks[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition.Salt Lake City,UT,USA:IEEE,2018:7132-7141.

[36] HOU Q B,ZHOU D Q,FENG J S.Coordinate attention for efficient mobile network design[C]//2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville,TN,USA:IEEE,2021:13708-13717.

[37] ZHANG H Y,WANG Y,DAYOUB F,SÜNDERHAUF N.VarifocalNet:An IoU-aware dense object detector[C]//2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR).Nashville,TN,USA:IEEE,2021:8510-8519.

[38] 马帅,张艳,周桂红,刘博.基于改进YOLOv4 模型的自然环境下梨果实识别[J].河北农业大学学报,2022,45(3):105-111.MA Shuai,ZHANG Yan,ZHOU Guihong,LIU Bo.Recognition of pear fruit under natural environment using an improved YOLOv4 model[J].Journal of Hebei Agricultural University,2022,45(3):105-111.

[39] 刘忠意,魏登峰,李萌,周绍发,鲁力,董雨雪.基于改进YOLOv5 的橙子果实识别方法[J].江苏农业科学,2023,51(19):173-181.LIU Zhongyi,WEI Dengfen,LI Meng,ZHOU Shaofa,LU Li,DONG Yuxue. Orange fruit recognition method based on improved YOLOv5[J]. Jiangsu Agricultural Sciences,2023,51(19):173-181.

[40] HAN K,WANG Y H,TIAN Q,GUO J Y,XU C J,XU C. GhostNet:More features from cheap operations[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR).Seattle,WA,USA:IEEE,2020:1577-1586.

[41] WANG Q L,WU B G,ZHU P F,LI P H,ZUO W M,HU Q H.ECA-net:efficient channel attention for deep convolutional neural networks[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR).Seattle,WA,USA:IEEE,2020:11531-11539.

[42] 贺英豪,唐德钊,倪铭,蔡起起.基于改进YOLOv5 对果园环境中李的识别[J].华中农业大学学报,2024,43(5):31-40.HE Yinghao,TANG Dezhao,NI Ming,CAI Qiqi. Recognizing plums in orchard environment based on improved YOLOv5[J].Journal of Huazhong Agricultural University,2024,43(5):31-40.

[43] 黄彤镔,黄河清,李震,吕石磊,薛秀云,代秋芳,温威. 基于YOLOv5 改进模型的柑橘果实识别方法[J].华中农业大学学报,2022,41(4):170-177.HUANG Tongbin,HUANG Heqing,LI Zhen,LÜ Shilei,XUE Xiuyun,DAI Qiufang,WEN Wei. Citrus fruit recognition method based on the improved model of YOLOv5[J].Journal of Huazhong Agricultural University,2022,41(4):170-177.

[44] WOO S,PARK J,LEE J Y,KWEON I S. CBAM:Convolutional block attention module[EB/OL]. 2018:1807.06521. https://arxiv.org/abs/1807.06521v2

[45] HE J B,ERFANI S,MA X J,BAILEY J,CHI Y,HUA X S.Alpha-IoU:A family of power intersection over union losses for bounding box regression[EB/OL].2021:2110.13675.https://arxiv.org/abs/2110.13675v2

[46] 苑迎春,张傲,何振学,张若晨,雷浩.基于改进YOLOv4-tiny的果园复杂环境下桃果实实时识别[J]. 中国农机化学报,2024,45(8):254-261.YUAN Yingchun,ZHANG Ao,HE Zhenxue,ZHANG Ruochen,LEI Hao. Peach fruit real-time recognition in complex orchard environment based on improved YOLOv4-tiny[J]. Journal of Chinese Agricultural Mechanization,2024,45(8):254-261.

[47] ZHU L,DENG Z J,HU X W,FU C W,XU X M,QIN J,HENG P A. Bidirectional feature pyramid network with recurrent attention residual modules for shadow detection[C]//Proceedings of the European Conference on Computer Vision (ECCV). Cham:Springer International Publishing,2018:122-136.

[48] 熊俊涛,郑镇辉,梁嘉恩,钟灼,刘柏林,孙宝霞.基于改进YOLOv3 网络的夜间环境柑橘识别方法[J].农业机械学报,2020,51(4):199-206.XIONG Juntao,ZHENG Zhenhui,LIANG Jia’en,ZHONG Zhuo,LIU Bolin,SUN Baoxia.Citrus detection method in night environment based on improved YOLOv3 network[J]. Transactions of the Chinese Society for Agricultural Machinery,2020,51(4):199-206.

[49] HE K M,ZHANG X Y,REN S Q,SUN J. Deep residual learning for image recognition[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas,NV,USA:IEEE,2016:770-778.

[50] 熊俊涛,霍钊威,黄启寅,陈浩然,杨振刚,黄煜华,苏颖苗.结合主动光源和改进YOLOv5s 模型的夜间柑橘检测方法[J].华南农业大学学报,2024,45(1):97-107.XIONG Juntao,HUO Zhaowei,HUANG Qiyin,CHEN Haoran,YANG Zhengang,HUANG Yuhua,SU Yingmiao. Detection method of citrus in nighttime environment combined with active light source and improved YOLOv5s model[J].Journal of South China Agricultural University,2024,45(1):97-107.

[51] 余圣新,韦莹莹,方辉,李敏,柴秀娟,曾志康,覃泽林.基于改进YOLOv8 的自然环境下柑橘果实识别[J].湖北农业科学,2024,63(8):23-27.YU Shengxin,WEI Yingying,FANG Hui,LI Min,CHAI Xiujuan,ZENG Zhikang,QIN Zelin. Citrus fruit recognition in natural environment based on improved YOLOv8[J]. Hubei Agricultural Sciences,2024,63(8):23-27.

[52] MA S L,XU Y. MPDIoU:A loss for efficient and accurate bounding box regression[EB/OL]. 2023:2307.07662.https://arxiv.org/abs/2307.07662v1.

[53] 岳有军,漆潇,赵辉,王红君.基于改进YOLOv8 的果园复杂环境下苹果检测模型研究[J/OL]. 南京信息工程大学学报,2024:1-13(2024-07-15). https://doi.org/10.13878/j.cnki.jnuist.20240410002.YUE Youjun,QI Xiao,ZHAO Hui,WANG Hongjun. Research on apple detection model in complex orchard environments based on improved YOLOv8[J/OL]. Journal of Nanjing University of Information Science & Technology,2024:1-13(2024-07-15).https://doi.org/10.13878/j.cnki.jnuist.20240410002.

[54] ZHANG H,XU C,ZHANG S J.Inner-IoU:More effective intersection over union loss with auxiliary bounding box[EB/OL].2023:2311.02877.https://arxiv.org/abs/2311.02877v4.

[55] 吕石磊,卢思华,李震,洪添胜,薛月菊,吴奔雷. 基于改进YOLOv3-LITE 轻量级神经网络的柑橘识别方法[J].农业工程学报,2019,35(17):205-214.LÜ Shilei,LU Sihua,LI Zhen,HONG Tiansheng,XUE Yueju,WU Benlei. Orange recognition method using improved YOLOv3-LITE lightweight neural network[J]. Transactions of the Chinese Society of Agricultural Engineering,2019,35(17):205-214.

[56] REZATOFIGHI H,TSOI N,GWAK J,SADEGHIAN A,REID I,SAVARESE S. Generalized intersection over union:A metric and a loss for bounding box regression[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR).Long Beach,CA,USA:IEEE,2019:658-666.

[57] 王卓,王健,王枭雄,时佳,白晓平,赵泳嘉. 基于改进YOLOv4 的自然环境苹果轻量级检测方法[J]. 农业机械学报,2022,53(8):294-302.WANG Zhuo,WANG Jian,WANG Xiaoxiong,SHI Jia,BAI Xiaoping,ZHAO Yongjia. Lightweight real-time apple detection method based on improved YOLOv4[J].Transactions of the Chinese Society for Agricultural Machinery,2022,53(8):294-302.

[58] 曾俊,陈仁凡,邹腾跃.基于改进YOLO 的自然环境下桃子成熟度快速检测模型[J].南方农机,2023,54(24):24-27.ZENG Jun,CHEN Renfan,ZOU Tengyue.Rapid maturity detection model for peaches in natural environment based on improved YOLO[J]. China Southern Agricultural Machinery,2023,54(24):24-27.

[59] CHEN J R,KAO S H,HE H,ZHUO W P,WEN S,LEE C H,CHAN S H G. Run,Don’t walk:Chasing higher FLOPS for faster neural networks[C]//2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Vancouver,BC,Canada:IEEE,2023:12021-12031.

[60] LIU G L,REDA F A,SHIH K J,WANG T C,TAO A,CATANZARO B. Image inpainting for irregular holes using partial convolutions[C]//Proceedings of the European conference on computer vision (ECCV). Cham:Springer International Publishing,2018:85-100.

[61] GEVORGYAN Z.SIoU loss:More powerful learning for bounding box regression[EB/OL]. 2022:2205.12740. https://arxiv.org/abs/2205.12740v1

[62] 赵辉,乔艳军,王红君,岳有军.基于改进YOLOv3 的果园复杂环境下苹果果实识别[J].农业工程学报,2021,37(16):127-135.ZHAO Hui,QIAO Yanjun,WANG Hongjun,YUE Youjun.Apple fruit recognition in complex orchard environment based on improved YOLOv3[J]. Transactions of the Chinese Society of Agricultural Engineering,2021,37(16):127-135.

[63] WANG C Y,MARK LIAO H Y,WU Y H,CHEN P Y,HSIEH J W,YEH I H. CSPNet:a new backbone that can enhance learning capability of CNN[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Seattle,WA,USA:IEEE,2020:1571-1580.

[64] YAN B,FAN P,LEI X Y,LIU Z J,YANG F Z.A real-time apple targets detection method for picking robot based on improved YOLOv5[J].Remote Sensing,2021,13(9):1619.

[65] 王乙涵.基于改进YOLOv7 的自然环境下柑橘果实识别与定位方法研究[D].雅安:四川农业大学,2023.WANG Yihan. Research on detection and localization of citrus in natural environment based on improved YOLOv7[D].Ya’an:Sichuan Agricultural University,2023.

[66] DING X H,ZHANG X Y,MA N N,HAN J G,DING G G,SUN J.RepVGG:Making VGG-style ConvNets great again[C]//2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Nashville,TN,USA:IEEE,2021:13728-13737.

[67] YANG H W,LIU Y Z,WANG S W,QU H X,LI N,WU J,YAN Y F,ZHANG H J,WANG J X,QIU J F. Improved apple fruit target recognition method based on YOLOv7 model[J].Agriculture,2023,13(7):1278.

[68] 张震,周俊,江自真,韩宏琪.基于改进YOLOv7 轻量化模型的自然果园环境下苹果识别方法[J].农业机械学报,2024,55(3):231-242.ZHANG Zhen,ZHOU Jun,JIANG Zizhen,HAN Hongqi.Lightweight apple recognition method in natural orchard environment based on improved YOLOv7 model[J].Transactions of the Chinese Society for Agricultural Machinery,2024,55(3):231-242.

[69] XUE J K,SHEN B.A novel swarm intelligence optimization approach:Sparrow search algorithm[J]. Systems Science & Control Engineering,2020,8(1):22-34.

[70] GIRSHICK R,DONAHUE J,DARRELL T,MALIK J. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//2014 IEEE Conference on Computer Vision and Pattern Recognition. Columbus,OH,USA:IEEE,2014:580-587.

[71] HEARST M A,DUMAIS S T,OSUNA E,PLATT J,SCHOLKOPF B. Support vector machines[J]. IEEE Intelligent Systems and Their Applications,1998,13(4):18-28.

[72] HE K M,ZHANG X Y,REN S Q,SUN J.Spatial pyramid pooling in deep convolutional networks for visual recognition[J].IEEE Transactions on Pattern Analysis and Machine Intelligence,2015,37(9):1904-1916.

[73] GIRSHICK R.Fast R-CNN[C]//2015 IEEE International Conference on Computer Vision(ICCV).Santiago,Chile:IEEE,2015:1440-1448.

[74] REN S Q,HE K M,GIRSHICK R,SUN J. Faster R-CNN:Towards real-time object detection with region proposal networks[J].IEEE Transactions on Pattern Analysis and Machine Intelligence,2017,39(6):1137-1149.

[75] DAI J F,LI Y,HE K M,SUN J.R-FCN:Object detection via region-based fully convolutional networks[C]//Proceedings of the 30th International Conference on Neural Information Processing Systems. 2016:379-387.

[76] CAI Z W,VASCONCELOS N. Cascade R-CNN:Delving into high quality object detection[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City,UT,USA:IEEE,2018:6154-6162.

[77] 任会,朱洪前.基于深度学习的目标橘子识别方法研究[J].计算机时代,2021(1):57-60.REN Hui,ZHU Hongqian. Research on the method of identifying target orange with deep learning[J].Computer Era,2021(1):57-60.

[78] WAN S H,GOUDOS S. Faster R-CNN for multi-class fruit detection using a robotic vision system[J]. Computer Networks,2020,168:107036.

[79] LIU J,ZHAO M R,GUO X F.A fruit detection algorithm based on R-FCN in natural scene[C]//2020 Chinese Control and Decision Conference(CCDC).Hefei,China:IEEE,2020:487-492.

[80] 黄磊磊,苗玉彬.基于深度学习的重叠柑橘分割与形态复原[J].农机化研究,2023,45(10):70-75.HUANG Leilei,MIAO Yubin. Overlapping citrus segmentation and morphological restoration based on deep learning[J]. Journal of Agricultural Mechanization Research,2023,45(10):70-75.

[81] 荆伟斌,李存军,竞霞,赵叶,程成.基于深度学习的苹果树侧视图果实识别[J].中国农业信息,2019,31(5):75-83.JING Weibin,LI Cunjun,JING Xia,ZHAO Ye,CHENG Cheng.Fruit identification with apple tree side view based on deep learning[J].China Agricultural Informatics,2019,31(5):75-83.

[82] 贾艳平,桑妍丽,李月茹.基于改进Faster R-CNN 模型的水果分类识别[J].食品与机械,2023,39(8):129-135.JIA Yanping,SANG Yanli,LI Yueru. Fruit identification using improved Faster R-CNN model[J].Food&Machinery,2023,39(8):129-135.

[83] LU J Q,YANG R F,YU C R,LIN J H,CHEN W D,WU H W,CHEN X,LAN Y B,WANG W X. Citrus green fruit detection via improved feature network extraction[J]. Frontiers in Plant Science,2022,13:946154.

[84] MIN W Q,WANG Z L,YANG J H,LIU C L,JIANG S Q. Vision-based fruit recognition via multi-scale attention CNN[J].Computers and Electronics in Agriculture,2023,210:107911.

[85] GOODFELLOW I J,POUGET-ABADIE J,MIRZA M,XU B,WARDE-FARLEY D,OZAIR S,COURVILLE A,BENGIO Y.Generative adversarial networks[J]. Communications of the ACM,2020,63(11):139-144.

[86] 戈明辉,张俊,陆慧娟.基于机器视觉的食品外包装缺陷检测算法研究进展[J].食品与机械,2023,39(9):95-102.GE Minghui,ZHANG Jun,LU Huijuan. Research progress of food packaging defect detection based on machine vision[J].Food&Machinery,2023,39(9):95-102.

[87] 任磊,张俊,陆胜民. 脱囊衣橘片自动分拣机器视觉算法研究[J].浙江农业学报,2015,27(12):2212-2217.REN Lei,ZHANG Jun,LU Shengmin. Research on machine vision algorithm for automatic sorting of membrane-removed mandarin segments[J]. Acta Agriculturae Zhejiangensis,2015,27(12):2212-2217.